Egemen Sert

METU Computer Engineering

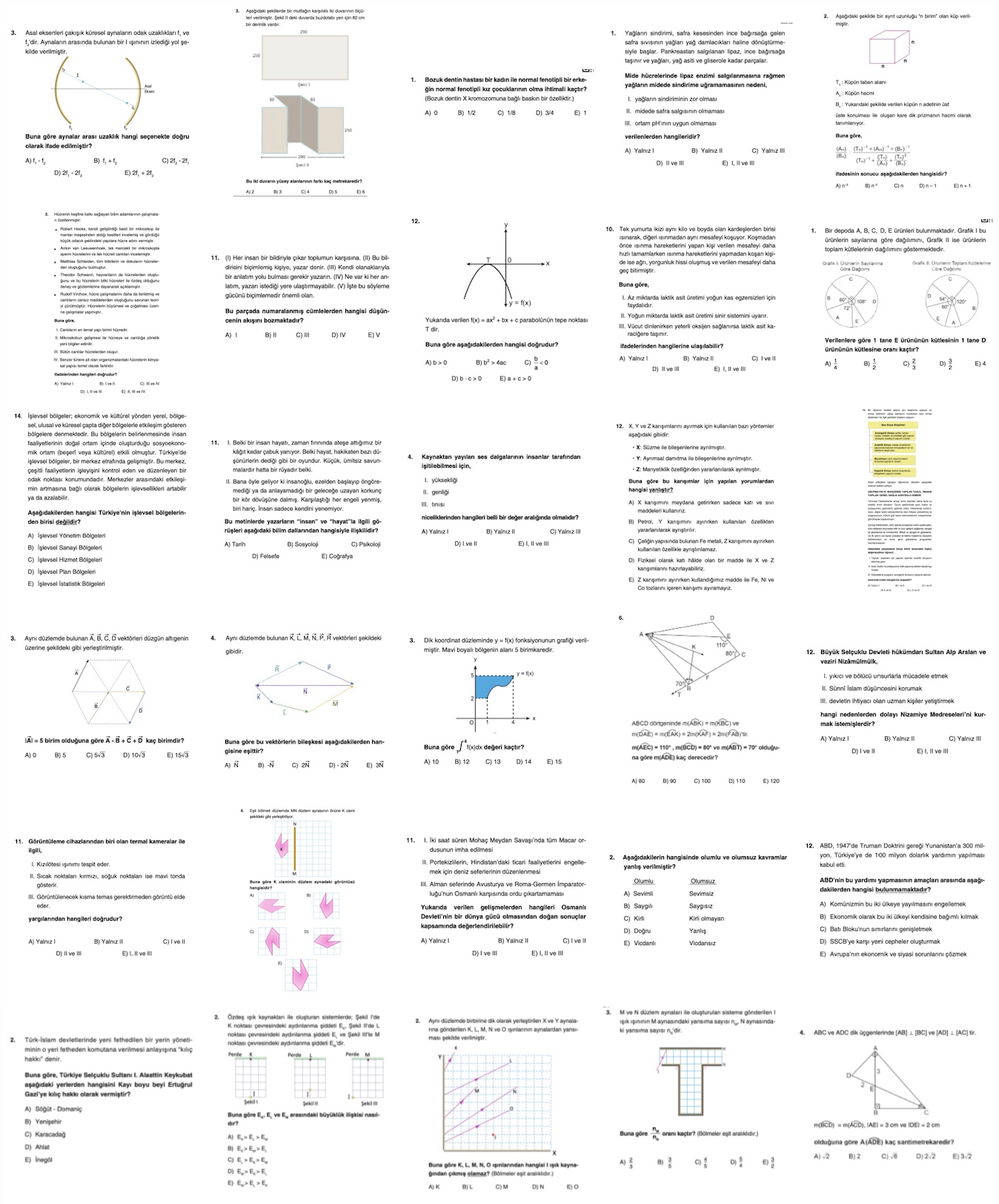

A balanced multimodal benchmark covering the Turkish high school curriculum, with equal representation across all topics.

Large Language Models (LLMs) and Large Multimodal Models (LMMs) demonstrate impressive problem-solving skills across many tasks and domains. However, their ability to reason over structured, curriculum-based educational questions, particularly in the context of the Turkish university entrance examination (YKS), has not been systematically studied.

To address this gap, we introduce YKS Uniform, a balanced multimodal benchmark covering the Turkish high school curriculum with equal representation across all topics. By sampling six questions per topic, we constructed a dataset of 1,854 multimodal questions spanning both TYT and AYT exams. These questions require deep reasoning over text, diagrams, and exam-style contexts.
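The balanced design can be illustrated with a short sketch. It is not the authors' pipeline: the question pool, the `topic` field name, the helper name `sample_uniform`, and the fixed seed are assumptions made for illustration. Six questions per topic and 1,854 questions in total imply 309 topics.

```python
import random
from collections import defaultdict

QUESTIONS_PER_TOPIC = 6  # stated in the text above


def sample_uniform(pool, per_topic=QUESTIONS_PER_TOPIC, seed=0):
    """Draw the same number of questions from every topic in the pool."""
    by_topic = defaultdict(list)
    for question in pool:
        by_topic[question["topic"]].append(question)

    rng = random.Random(seed)  # fixed seed so the draw is repeatable
    sampled = []
    for topic in sorted(by_topic):
        candidates = by_topic[topic]
        if len(candidates) < per_topic:
            raise ValueError(f"topic {topic!r} has only {len(candidates)} questions")
        sampled.extend(rng.sample(candidates, per_topic))
    return sampled


# Toy pool (hypothetical): 3 topics with 10 candidate questions each.
pool = [{"topic": f"topic-{t}", "id": f"{t}-{i}"} for t in range(3) for i in range(10)]
subset = sample_uniform(pool)
print(len(subset))  # 18
```

Sampling a fixed count per topic, rather than a fixed fraction, is what keeps heavily tested topics from dominating the aggregate score.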

Using this benchmark, we conducted a comprehensive evaluation of 10 open-weight and proprietary models. Our results highlight both the strengths and limitations of current models in handling exam-style reasoning tasks. The best-performing system, Gemini-2.5-Flash, achieved an overall accuracy of 84.7%, substantially higher than open-weight alternatives but still leaving a measurable gap to human-level performance.

Contributions of YKS Uniform:

YKS Uniform provides the first systematic lens into multimodal reasoning across the entire Turkish high school curriculum. We hope it will serve as a foundation for future research in educational AI, curriculum-grounded reasoning, and robust model evaluation in multimodal contexts.

The YKS Uniform dataset contains 1,854 multimodal questions sampled evenly across all topics in the Turkish high school curriculum. It is designed exclusively as a test benchmark.

[...] test set. [...] Qwen-2.5VL-32B on it. Our fine-tuned model, DLM QMSA, achieved 78.59% accuracy, ranking 3rd overall and outperforming all OpenAI models.

| Rank | Model | Date | All | TYT Turkish | TYT History | TYT Geography | TYT Philosophy | TYT Math | TYT Physics | TYT Chemistry | TYT Biology | AYT Literature | AYT History | AYT Geography | AYT Philosophy | AYT Math | AYT Physics | AYT Chemistry | AYT Biology |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Gemini 2.5 Flash 🥇 | 2025-07-17 | 84.7 | 77.8 | 84.2 | 86.5 | 93.3 | 85.1 | 73.1 | 84.7 | 86.7 | 84.8 | 90.4 | 91.7 | 86.9 | 85.4 | 72 | 88.2 | 87.7 |
| 2 | Gemini 2.0 Flash 🥈 | 2025-02-05 | 79.2 | 67.5 | 84.2 | 84.9 | 90 | 72.6 | 62 | 79.2 | 75.6 | 82.6 | 87.8 | 89.6 | 79.8 | 85.4 | 70.5 | 75.5 | 81.6 |
| 3 | METU DLM QMSA 🥉 | 2025-07-31 | 78.6 | 66.7 | 91.2 | 90.5 | 94.4 | 58.3 | 70.4 | 81.9 | 81.1 | 77.3 | 83.3 | 90.6 | 85.7 | 73.6 | 67.4 | 81.4 | 83.3 |
| 4 | OpenAI o3 | 2025-04-16 | 74.5 | 65.9 | 82.5 | 81 | 75.6 | 81 | 53.7 | 72.2 | 66.7 | 72 | 78.8 | 79.2 | 75 | 84 | 62.9 | 80.4 | 74.6 |
| 5 | OpenAI GPT-5 | 2025-08-07 | 73.2 | 64.3 | 78.1 | 75.4 | 73.3 | 84.5 | 53.7 | 73.6 | 68.9 | 70.5 | 80.1 | 80.2 | 71.4 | 86.1 | 55.3 | 71.6 | 75.4 |
| 6 | GLM 4.5V | 2025-08-11 | 69.4 | 46 | 65.8 | 63.5 | 77.8 | 76.2 | 56.5 | 81.9 | 74.4 | 59.1 | 65.4 | 82.3 | 83.3 | 80.6 | 59.8 | 79.4 | 73.7 |
| 7 | OpenAI o1 | 2024-12-05 | 68.8 | 62.7 | 71.1 | 72.2 | 80 | 70.2 | 54.6 | 79.2 | 61.1 | 68.9 | 73.1 | 71.9 | 65.5 | 79.2 | 57.6 | 64.7 | 68.4 |
| 8 | Gemini 1.5 Flash | 2024-09-24 | 67.2 | 51.6 | 70.2 | 74.6 | 86.7 | 51.8 | 57.4 | 73.6 | 61.1 | 60.6 | 75 | 85.4 | 84.5 | 68.8 | 53 | 66.7 | 73.7 |
| 9 | Gemma 3 27B | 2025-03-10 | 63.1 | 44.4 | 76.3 | 66.7 | 82.2 | 50.6 | 48.1 | 69.4 | 72.2 | 62.1 | 67.9 | 76 | 77.4 | 64.6 | 41.7 | 59.8 | 71.1 |
| 10 | Qwen2.5 VL 32B | 2025-02-20 | 62.5 | 43.7 | 61.4 | 65.1 | 81.1 | 56 | 60.2 | 70.8 | 67.8 | 49.2 | 66.7 | 70.8 | 72.6 | 68.1 | 53.8 | 62.7 | 66.7 |
| 11 | Claude Sonnet 4 | 2025-05-22 | 60.4 | 46.8 | 64.9 | 64.3 | 71.1 | 56 | 53.7 | 59.7 | 56.7 | 59.8 | 64.1 | 61.5 | 70.2 | 72.2 | 52.3 | 63.7 | 52.6 |
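The leaderboard entries are accuracies in percent. As a rough illustration of how such scores could be derived from a predictions file, the sketch below groups records by their `exam` field. The ground-truth field name `answer` and the helper name `accuracy_by_exam` are assumptions, and treating the "All" column as accuracy pooled over every question is also an assumption rather than something the benchmark states.

```python
from collections import defaultdict


def accuracy_by_exam(records):
    """Return {exam: accuracy in %} plus an 'All' entry pooled over every record."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for rec in records:
        # A missing prediction (None) is counted as wrong.
        hit = rec["predicted_answer"] is not None and rec["predicted_answer"] == rec["answer"]
        for key in (rec["exam"], "All"):
            total[key] += 1
            correct[key] += int(hit)
    return {key: round(100.0 * correct[key] / total[key], 1) for key in total}


# Toy records (hypothetical); "answer" stands in for the gold label.
records = [
    {"exam": "TYT Physics", "predicted_answer": "A", "answer": "A"},
    {"exam": "TYT Physics", "predicted_answer": None, "answer": "C"},
    {"exam": "AYT Math", "predicted_answer": "E", "answer": "E"},
]
scores = accuracy_by_exam(records)
print(scores)
```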

- Submit your results as a `.jsonl` file.
- Each line should include the `model_dump` from an OpenAI-compatible API call (Gemini, vLLM, and SGLang's OpenAI wrappers are accepted).
- Each line should include `predicted_answer`. Also include the function you used to extract `predicted_answer` from the generated solution.
- Use `temperature=0` (if applicable) for reproducibility.

Example JSONL line

    {
      "predicted_answer": "A",             // one of: A,B,C,D,E or null
      "solution_context": { ... },         // openai_wrapper_response.model_dump()
      "exam": "TYT Physics",               // optional: dataset fields (except image)
      "topic": "Heat and Temperature"
    }
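One way to produce such a line is sketched below. It illustrates the format under assumptions and is not the maintainers' reference script: the "Answer: <letter>" convention, the extraction regex, and the helper names (`extract_predicted_answer`, `build_record`, `query_model`, `append_jsonl`) are hypothetical. Only the record field names and `temperature=0` come from the instructions above. A real prompt would also attach the question image, which is omitted here.

```python
import json
import re
from typing import Optional

# Hypothetical convention: the model is asked to finish with "Answer: <letter>".
ANSWER_PATTERN = re.compile(r"(?i:answer)\s*:\s*\(?([A-E])\b")


def extract_predicted_answer(solution_text: Optional[str]) -> Optional[str]:
    """Return the last A-E letter given after 'Answer:', or None if absent."""
    matches = ANSWER_PATTERN.findall(solution_text or "")
    return matches[-1] if matches else None


def build_record(response_dump: dict, exam: str, topic: str) -> dict:
    """Assemble one submission record from a chat-completion model_dump()."""
    text = response_dump["choices"][0]["message"]["content"]
    return {
        "predicted_answer": extract_predicted_answer(text),
        "solution_context": response_dump,
        "exam": exam,
        "topic": topic,
    }


def query_model(client, model: str, prompt: str) -> dict:
    """Call an OpenAI-compatible chat endpoint with temperature=0."""
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        temperature=0,
    )
    return response.model_dump()


def append_jsonl(path: str, record: dict) -> None:
    """Append one record as a single line of JSON."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record, ensure_ascii=False) + "\n")


# Offline demonstration with a hand-written dump in the chat-completion shape.
fake_dump = {"choices": [{"message": {"role": "assistant",
                                      "content": "Heat flows from hot to cold. Answer: B"}}]}
record = build_record(fake_dump, "TYT Physics", "Heat and Temperature")
print(record["predicted_answer"])  # B
```

Taking the last match rather than the first lets a solution that revises its answer midway still be scored on its final choice.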

Email your .jsonl file to this address.

    @misc{yksuniform2025,
      title        = {YKS Uniform: A Balanced Multimodal Benchmark Covering the Turkish High School Curriculum},
      author       = {Sert, Egemen and Ertekin, Şeyda},
      year         = {2025},
      howpublished = {\url{https://yks-uniform.github.io/}},
      note         = {Accessed: 2025-08-23}
    }